Observability 101: A Guide to Azure Event Hubs

Introduction

In today's fast-paced digital world, handling large volumes of data in real time is a crucial requirement for many organizations. Azure Event Hubs, a fully managed real-time data ingestion service, provides a scalable solution for collecting and processing large streams of data. This blog post covers the key features of Azure Event Hubs and its common use cases, with examples that illustrate how it works.

What is Azure Event Hubs?

Azure Event Hubs is a big data streaming platform and event ingestion service capable of receiving and processing millions of events per second. It acts as the "front door" for an event pipeline, allowing data to be ingested and made available for stream processing and real-time analytics.

Key Features of Azure Event Hubs

- High Throughput:
  - Event Hubs can process millions of events per second, making it suitable for high-volume data ingestion.
- Scalability:
  - Capacity can be scaled up as load grows, and on the Standard tier the Auto-inflate feature can raise the number of throughput units automatically, helping maintain reliable performance under heavy data influx.
- Partitioned Consumer Model:
  - Event Hubs supports partitioning, allowing multiple consumers to read and process data in parallel.
- Real-time and Batch Processing:
  - Supports both real-time event streaming and batch processing, offering flexibility in how data is handled.
- Retention:
  - Events are retained for a configurable period (up to 7 days on the Standard tier and up to 90 days on the Premium and Dedicated tiers), allowing for delayed processing and replay of data.
- Security:
  - Provides robust security features, including encryption, Shared Access Signatures (SAS), and Virtual Network Service Endpoints.
- Integration with Azure Ecosystem:
  - Integrates with other Azure services such as Azure Stream Analytics, Azure Functions, and Azure Data Lake for end-to-end data processing and analytics solutions.
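
To make the retention feature concrete, here is a minimal in-memory sketch of a time-based retention window. It is not the Event Hubs API: `RetainedLog`, `append`, `expire`, and `replay` are hypothetical names, offsets are simple counters, and expiry is checked on every append, whereas the real service handles expiry internally. It shows the two properties the feature list mentions: old events age out, and anything still retained can be read again from an earlier offset.

```python
from datetime import datetime, timedelta


class RetainedLog:
    """Hypothetical append-only log that keeps events for a fixed retention period."""

    def __init__(self, retention: timedelta):
        self.retention = retention
        self.events = []        # list of (offset, enqueued_time, body)
        self.next_offset = 0

    def append(self, body: str, enqueued_time: datetime) -> int:
        offset = self.next_offset
        self.events.append((offset, enqueued_time, body))
        self.next_offset += 1
        self.expire(now=enqueued_time)
        return offset

    def expire(self, now: datetime) -> None:
        # Drop events older than the retention period, measured from "now".
        cutoff = now - self.retention
        self.events = [e for e in self.events if e[1] >= cutoff]

    def replay(self, from_offset: int) -> list:
        # Re-read every still-retained event at or after the given offset.
        return [body for offset, _, body in self.events if offset >= from_offset]


log = RetainedLog(retention=timedelta(days=7))
start = datetime(2024, 1, 1)
log.append("a", start)
log.append("b", start + timedelta(days=3))
log.append("c", start + timedelta(days=8))   # "a" is now older than 7 days
print(log.replay(0))   # ['b', 'c']
print(log.replay(2))   # ['c']
```

Offsets stay stable after expiry, so a consumer that remembered offset 2 can still replay from there, while the event at offset 0 is gone for good.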

How Azure Event Hubs Works

Azure Event Hubs follows a publish-subscribe model. Producers send events to the Event Hub, and consumers (or subscribers) read and process these events.

- Event Producers:
  - These are applications or devices that send data to the Event Hub. Producers can use various protocols (like AMQP or HTTPS) to push events.
- Event Hubs:
  - An Event Hub is the entity that receives and stores a stream of events. It is divided into partitions, which distribute events so that multiple consumers can read them in parallel.
- Event Consumers:
  - These are applications or services that read and process data from the Event Hub. Consumers connect to specific partitions and read events.
- Event Processors:
  - Event Processors are responsible for processing events. They can be custom applications, Azure Stream Analytics jobs, or Azure Functions.
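
The flow above can be modelled in a few lines of plain Python. This is a simplified, in-memory sketch rather than the Event Hubs SDK: `InMemoryEventHub`, `send`, `read`, and `partition_for` are hypothetical names, and the CRC-32 hash used to map a partition key to a partition is a stand-in, since the service uses its own internal hashing. What it does show is the property that matters: events that share a partition key land in the same partition and keep their relative order, while a consumer reads a partition by offset.

```python
import zlib


class InMemoryEventHub:
    """Hypothetical stand-in for an event hub: a fixed set of ordered partitions."""

    def __init__(self, partition_count: int):
        self.partitions = [[] for _ in range(partition_count)]

    def partition_for(self, partition_key: str) -> int:
        # Stand-in hash; the real service uses its own hashing algorithm.
        return zlib.crc32(partition_key.encode("utf-8")) % len(self.partitions)

    def send(self, body: str, partition_key: str) -> tuple:
        """Producer side: append the event and return (partition_id, offset)."""
        pid = self.partition_for(partition_key)
        self.partitions[pid].append(body)
        return pid, len(self.partitions[pid]) - 1

    def read(self, partition_id: int, from_offset: int = 0) -> list:
        """Consumer side: read one partition from a given offset onward."""
        return self.partitions[partition_id][from_offset:]


hub = InMemoryEventHub(partition_count=4)
for i in range(3):
    hub.send(f"device-42 reading {i}", partition_key="device-42")
    hub.send(f"device-7 reading {i}", partition_key="device-7")

# All device-42 events share one partition, in the order they were sent.
pid = hub.partition_for("device-42")
print(pid, [e for e in hub.read(pid) if e.startswith("device-42")])
```

Two keys may still hash to the same partition, which is why the consumer above filters by key; ordering is only guaranteed within a partition, not across the whole hub.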

Key Components

- Namespace:
  - A container for Event Hubs, providing a unique DNS name for your service endpoint.
- Event Hub:
  - The core entity that holds event data, consisting of multiple partitions.
- Partitions:
  - Logically separate sequences of events, allowing parallel consumption.
- Consumer Groups:
  - Views (or states) of an entire Event Hub, enabling multiple independent readers to process the event stream concurrently.
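
Consumer groups are easiest to picture as independent sets of read positions over the same partitions. The sketch below is a hypothetical model, not how the service stores positions: `ConsumerGroupOffsets` and `poll` are illustrative names, and offsets are plain list indices, whereas real offsets and sequence numbers are assigned by Event Hubs. Each group keeps its own position per partition, so one group reading ahead does not move another group's position.

```python
class ConsumerGroupOffsets:
    """Hypothetical model: each consumer group tracks its own offset per partition."""

    def __init__(self, partitions):
        self.partitions = partitions      # list of lists of events
        self.offsets = {}                 # (group, partition_id) -> next offset to read

    def poll(self, group, partition_id, max_events=10):
        start = self.offsets.get((group, partition_id), 0)
        events = self.partitions[partition_id][start:start + max_events]
        # Advance only this group's position; other groups are unaffected.
        self.offsets[(group, partition_id)] = start + len(events)
        return events


partitions = [["e0", "e1", "e2"], ["f0"]]
groups = ConsumerGroupOffsets(partitions)
print(groups.poll("$Default", 0, max_events=2))   # ['e0', 'e1']
print(groups.poll("analytics", 0))                 # ['e0', 'e1', 'e2']
print(groups.poll("$Default", 0))                  # ['e2']
```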

Using Azure Event Hubs

Creating an Event Hub Namespace and Event Hub

- Create an Event Hub Namespace:
  - Go to the Azure portal and search for "Event Hubs".
  - Click "Create" and fill in the necessary details to create a new namespace.
- Create an Event Hub:
  - Within the namespace, create a new Event Hub.
  - Specify the name, partition count, and retention period.
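
If you script this step instead of clicking through the portal, it can help to validate the settings first. The sketch below is a hypothetical helper, not part of any Azure SDK. The maximum retention per tier (1 day for Basic, 7 for Standard, 90 for Premium and Dedicated) is an assumption based on the published quotas and should be checked against the current Event Hubs quotas page. On the Basic and Standard tiers the partition count cannot be changed after the event hub is created, so it is worth choosing deliberately.

```python
from dataclasses import dataclass

# Maximum retention in days per tier (assumed from published quotas; verify before use).
MAX_RETENTION_DAYS = {"Basic": 1, "Standard": 7, "Premium": 90, "Dedicated": 90}


@dataclass(frozen=True)
class EventHubSettings:
    """Hypothetical container for the values entered when creating an event hub."""
    name: str
    tier: str
    partition_count: int
    retention_days: int

    def validate(self) -> list:
        """Return a list of problems; an empty list means the settings look usable."""
        problems = []
        if not self.name:
            problems.append("name must not be empty")
        if self.tier not in MAX_RETENTION_DAYS:
            problems.append(f"unknown tier: {self.tier}")
        elif not 1 <= self.retention_days <= MAX_RETENTION_DAYS[self.tier]:
            problems.append(
                f"retention must be 1-{MAX_RETENTION_DAYS[self.tier]} days on {self.tier}"
            )
        if self.partition_count < 1:
            problems.append("partition_count must be at least 1")
        return problems


print(EventHubSettings("telemetry", "Standard", 4, 7).validate())    # []
print(EventHubSettings("telemetry", "Standard", 4, 30).validate())
```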

Sending Events to Event Hubs

You can send events to Event Hubs using various SDKs (like .NET, Java, Python) or REST APIs. Here's an example using Python:

```python
from azure.eventhub import EventHubProducerClient, EventData

# Create a producer client to send events to the Event Hub
producer = EventHubProducerClient.from_connection_string(
    conn_str="YourConnectionString",
    eventhub_name="YourEventHubName",
)

# The with-block closes the producer, even if sending fails
with producer:
    # Create a batch of events
    event_data_batch = producer.create_batch()

    # Add events to the batch
    event_data_batch.add(EventData("First event"))
    event_data_batch.add(EventData("Second event"))
    event_data_batch.add(EventData("Third event"))

    # Send the batch of events to the Event Hub
    producer.send_batch(event_data_batch)
```
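
A single batch has a maximum size, and in the Python SDK `EventDataBatch.add` raises `ValueError` when an event no longer fits. A common pattern is therefore to send the current batch and start a new one. The sketch below shows only that splitting logic in plain Python so it runs without a connection; `split_into_batches` and the `max_batch_bytes` value are hypothetical, the size accounting ignores the per-event overhead the real service adds, and in real code the limit should come from the batch itself rather than a hard-coded number.

```python
def split_into_batches(payloads, max_batch_bytes):
    """Group UTF-8 payloads into batches whose total size stays within max_batch_bytes.

    Simplified: ignores the per-event and per-batch overhead of the real service.
    """
    batches, current, current_size = [], [], 0
    for payload in payloads:
        size = len(payload.encode("utf-8"))
        if size > max_batch_bytes:
            raise ValueError(f"single event of {size} bytes exceeds the batch limit")
        if current and current_size + size > max_batch_bytes:
            # Current batch is full: close it off and start a new one.
            batches.append(current)
            current, current_size = [], 0
        current.append(payload)
        current_size += size
    if current:
        batches.append(current)
    return batches


events = ["First event", "Second event", "Third event"]
print(split_into_batches(events, max_batch_bytes=25))
# [['First event', 'Second event'], ['Third event']]
```

Each inner list corresponds to one batch you would fill with `EventData` objects and send with `producer.send_batch`.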

Receiving Events from Event Hubs

Consumers read events from the Event Hub. Here's an example using Python:

```python
from azure.eventhub import EventHubConsumerClient


def on_event(partition_context, event):
    # Print the event data.
    print("Received event from partition: {}".format(partition_context.partition_id))
    print("Event data: {}".format(event.body_as_str()))

    # Update the checkpoint. Without a checkpoint store passed to the client,
    # checkpoints are kept only in memory and are lost when the process exits.
    partition_context.update_checkpoint(event)


# Create a consumer client to read events from the Event Hub
consumer = EventHubConsumerClient.from_connection_string(
    conn_str="YourConnectionString",
    consumer_group="$Default",
    eventhub_name="YourEventHubName",
)

# Receive events from the Event Hub. receive() blocks until interrupted
# (for example with Ctrl+C); the with-block then closes the client.
with consumer:
    consumer.receive(
        on_event=on_event,
        starting_position="-1",  # "-1" is from the beginning of the partition.
    )
```
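
When a checkpoint store is passed to `EventHubConsumerClient`, the SDK uses `starting_position` only for partitions that have no checkpoint yet; partitions with a checkpoint resume after it. The sketch below models that decision in plain Python. `resolve_start` and the `checkpoints` dictionary are hypothetical, offsets are simplified to list indices, and `"@latest"` is modelled as "the current end of the partition".

```python
def resolve_start(partition_id, checkpoints, starting_position, partition_length):
    """Return the index of the first event a consumer would read from a partition.

    checkpoints maps partition_id -> index of the last processed event (hypothetical model).
    """
    if partition_id in checkpoints:
        # A stored checkpoint wins: resume just after the last processed event.
        return checkpoints[partition_id] + 1
    if starting_position == "-1":
        return 0                      # beginning of the partition
    if starting_position == "@latest":
        return partition_length       # only events that arrive from now on
    raise ValueError(f"unsupported starting_position in this sketch: {starting_position}")


checkpoints = {"0": 41}
print(resolve_start("0", checkpoints, "-1", partition_length=100))       # 42
print(resolve_start("1", checkpoints, "-1", partition_length=100))       # 0
print(resolve_start("2", checkpoints, "@latest", partition_length=100))  # 100
```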

Use Cases for Azure Event Hubs

- Telemetry Ingestion:
  - Collect telemetry data from IoT devices, applications, and services in real time.
- Log and Event Data Collection:
  - Aggregate log and event data from various sources for monitoring, troubleshooting, and analysis.
- Stream Processing:
  - Process and analyze real-time data streams using Azure Stream Analytics or custom processing applications.
- Data Archiving:
  - Store raw event data in Azure Blob Storage or Azure Data Lake Storage (for example, with the Event Hubs Capture feature) for long-term storage and batch processing.
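
As a small illustration of the stream-processing use case, the sketch below counts events per device in fixed, non-overlapping (tumbling) time windows, the kind of aggregation a Stream Analytics job or a custom consumer might run over telemetry. It is plain Python, not Stream Analytics query syntax; the `(device_id, timestamp)` event shape and the `tumbling_counts` name are assumptions made for the example.

```python
from collections import Counter
from datetime import datetime, timedelta


def tumbling_counts(events, window):
    """Count events per (window_start, device_id) using non-overlapping windows.

    events: iterable of (device_id, timestamp) tuples (hypothetical telemetry shape).
    window: a timedelta giving the window length.
    """
    epoch = datetime(1970, 1, 1)
    counts = Counter()
    for device_id, ts in events:
        # Align each timestamp to the start of the window that contains it.
        window_index = (ts - epoch) // window
        window_start = epoch + window_index * window
        counts[(window_start, device_id)] += 1
    return dict(counts)


t0 = datetime(2024, 1, 1, 12, 0, 0)
events = [
    ("sensor-1", t0),
    ("sensor-1", t0 + timedelta(seconds=20)),
    ("sensor-2", t0 + timedelta(seconds=30)),
    ("sensor-1", t0 + timedelta(seconds=65)),
]
for key, count in sorted(tumbling_counts(events, timedelta(minutes=1)).items()):
    print(key[0].time(), key[1], count)
```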

Best Practices for Using Azure Event Hubs

- Partitioning Strategy:
  - Design an appropriate partitioning strategy to balance load and optimize parallel processing.
- Security:
  - Use Shared Access Signatures (SAS) to control access, and use encryption to protect data in transit and at rest.
- Monitoring and Alerting:
  - Implement monitoring and alerting to track the performance and health of your Event Hub.
- Checkpointing:
  - Use checkpointing to keep track of processed events and ensure reliable event processing.
- Throttling and Backpressure:
  - Implement throttling and backpressure mechanisms to handle high load and prevent overloading consumers.
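
One common way to apply backpressure inside a consumer is a bounded buffer between the code that receives events and the worker that processes them: when the buffer is full, the receiving side blocks instead of reading further ahead. The sketch below uses Python's standard `queue.Queue` with a `maxsize`; `handle_event`, `worker`, and `BUFFER_SIZE` are hypothetical names, and with the Event Hubs SDK the blocking `put` would sit inside your `on_event` handler.

```python
import queue
import threading

BUFFER_SIZE = 5                    # hypothetical limit on in-flight events
buffer = queue.Queue(maxsize=BUFFER_SIZE)
processed = []
max_seen = 0


def handle_event(body):
    """Receiver side: put() blocks while the buffer is full (backpressure)."""
    global max_seen
    buffer.put(body)
    max_seen = max(max_seen, buffer.qsize())


def worker():
    """Processing side: drain the buffer until a None sentinel arrives."""
    while True:
        item = buffer.get()
        if item is None:
            buffer.task_done()
            break
        processed.append(item.upper())   # stand-in for real processing
        buffer.task_done()


thread = threading.Thread(target=worker)
thread.start()
for i in range(20):
    handle_event(f"event-{i}")
buffer.put(None)                   # tell the worker to stop
thread.join()
print(len(processed), max_seen)
```

Because the queue never holds more than `BUFFER_SIZE` items, a slow worker limits how far ahead the receiver can get, rather than letting unprocessed events pile up in memory.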

Conclusion

Azure Event Hubs is a powerful and scalable service for real-time data ingestion and processing. Its ability to handle massive data streams and integrate seamlessly with the Azure ecosystem makes it an essential tool for building robust data pipelines. By understanding its key features, usage patterns, and best practices, you can harness the full potential of Azure Event Hubs to drive real-time insights and enhance your observability capabilities. Whether you're dealing with telemetry data, log aggregation, or stream processing, Azure Event Hubs provides the flexibility and performance needed to manage your data effectively.

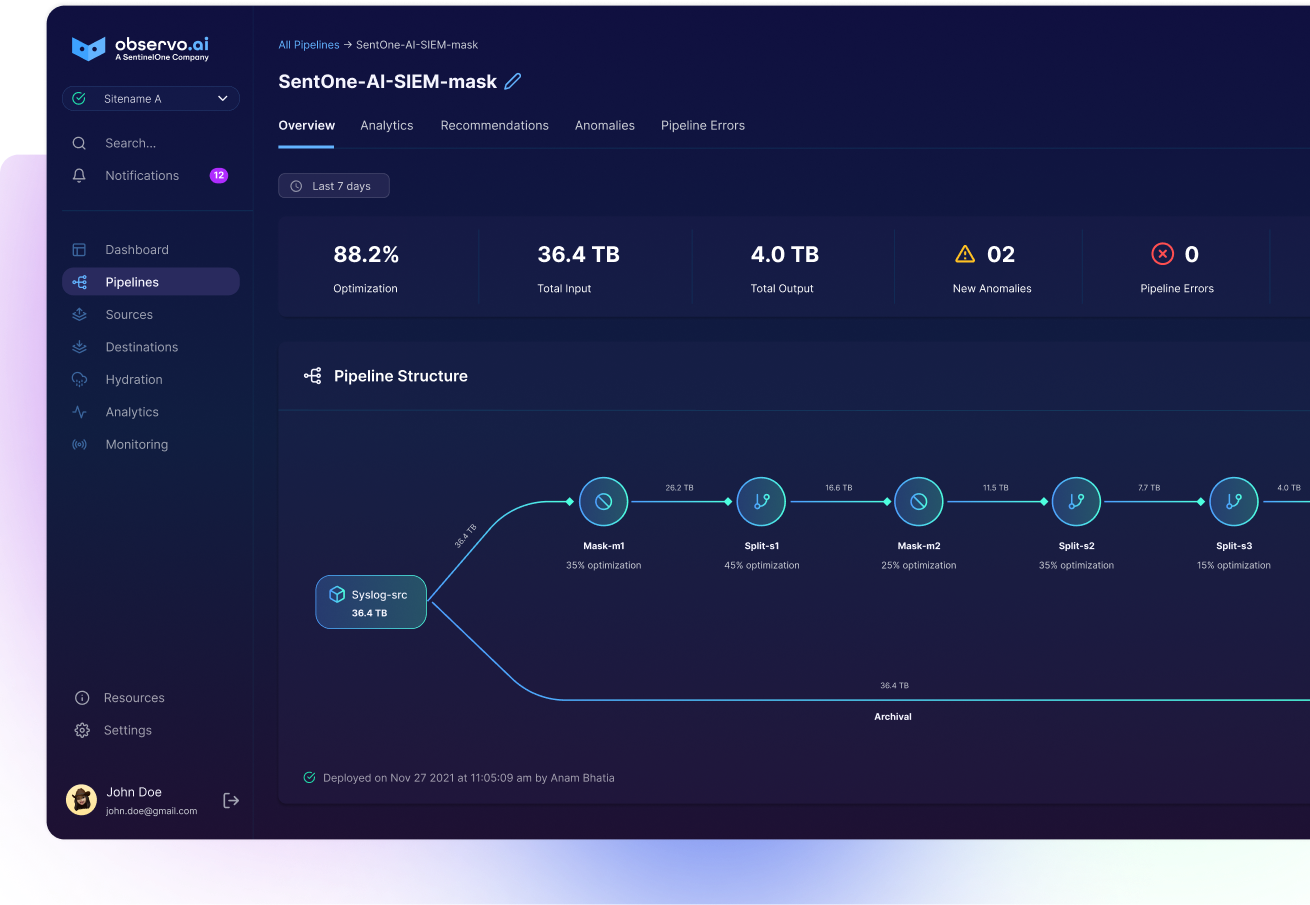

To automate many of these capabilities, use an AI-powered observability pipeline like Observo AI. Observo AI elevates observability with deeper data optimization and automated pipeline building, and it makes it much easier for anyone in your organization to derive value without having to be an expert in the underlying analytics tools and data types. It helps you break free from static, rules-based pipelines that fail to keep pace with the ever-changing nature of your data, and automates observability with a pipeline that constantly learns and evolves with your data.